Overview

For a recent client project, I needed to develop a one-way integration from a PostgreSQL database into Salesforce. Having had recent sucess with AWS as a platform for Salesforce integration, it was an easy choice to build on AWS again. This integration involved processing about 50,000 records a day.

The integration came together easily, so I wanted to share the AWS tools used and a few tips and tricks picked up along the way, in the hopes that you find it helpful in your own Salesforce integration work.

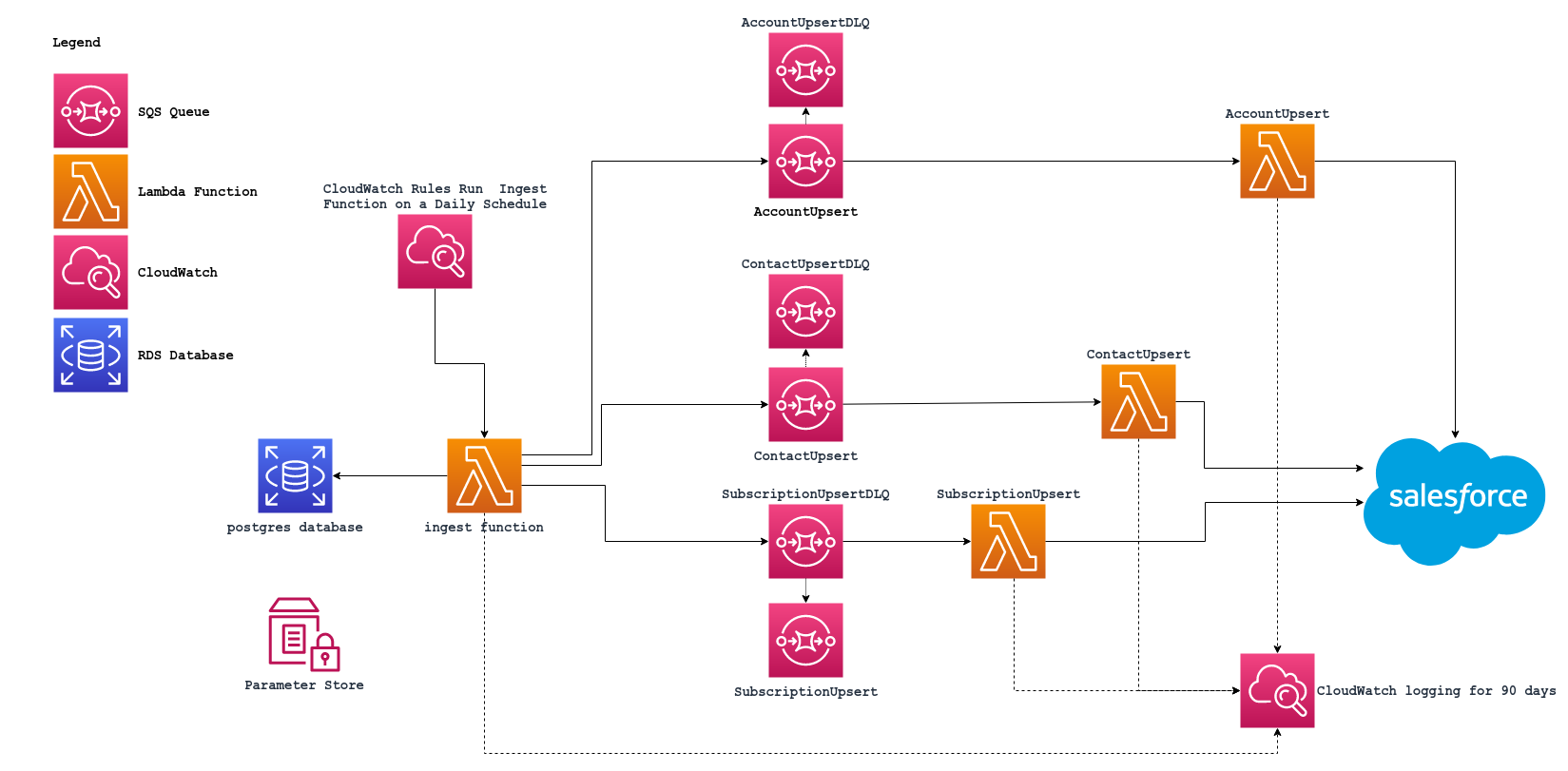

The integration combined four AWS tools:

- Lambda Functions host the integration processing logic

- Simple Queue Service (SQS) provides resiliance and a retry mechanism

- System Manager Parameter Store manages application state between runs of the integration and is also used to manage secrets

- Cloudwatch is used for logging, and Cloudwatch Rules are used to schedule the integration processing

Integration Process

An ingest Lambda Function runs for each integrated object, scheduled by a Cloudwatch Rule. It first retrieves needed secrets and the timestamp of last record processed from the Parameter Store, then retrieves all of the rows from the database that have been modified since the timestamp. It creates an SQS message for each record in the appropriate queue. As a final step, it updates the Parameter Store with the new timestamp value for the object.

Each SQS Queue has a related Lambda Function triggered by new messages in the queue. This Lambda Function executes the processing and performs an upsert to Salesforce via the REST API.

Messages that cannot be successfully processed after 10 attempts are added to the dead-letter queue associated with each queue for inspection. The queues are configured with a visibility timeout of several minutes. If a message fails and has to be reprocessed due to a temprorary issue - for example due to a record locking error - the visibility timeout creates a delay that typically allows the message to be processed successfully on a subsequent attempt.

Figure 1: Integration Overview

Figure 1: Integration Overview

Tips, Learnings, and Ideas

- This design is limited in how many rows it can process by the 15-minute execution limit on Lambda Functions. The ingest function is only able to accomplish so much work in that time. The ingest code is multithreaded to increase its performance, and the ingest Lambda Fuction is configured with extra resources compared to the other Lambda Functions. This design can handle the needed workload with ease, but I call this out in case your scenario requires many more rows to be processed, or includes some time-intensive database queries.

- In the current design, all of the intelligence about what message to route to what queues is contained in the ingest function. An alternative design would be to take advantage of AWS EventBridge to set up an event bus to contain this logic and route messages to the appropriate queues, simplifying the ingest function.

- In our actual setup, the RDS postgres database resided in a separate AWS account from the integration components - if this is your scenario you need to configure VPC peering so that your ingest Lambda Function can access the database.

- To move the integration from running on a schedule to being near-real-time, we could explore eliminating the database, and having events in the source system trigger messages to EventBridge, to be routed to the appropriate queues and processed. This would require, of course, the ability to add custom business logic to the source system.