Wrangling customer data often consumes a large part of a Salesforce administration team’s capacity. This includes transforming and moving data between Salesforce and other systems, as well as normalizing, verifying, and deduplicating customer data within Salesforce.

Accordingly, a host of offerings from Salesforce and third party software vendors are available to help administrators with the wrangling – addressing varying swaths of the problem space at various price points. As a general rule, I would steer clients to evaluate these solutions first when considering how to equip their Salesforce teams to handle data tasks. Many of these products are full-featured and mature, and have feature, cost, and maintainability advantages over custom solutions.

This blog post, however, is not about third party-applications for data management…

I recently spent a bit of time wondering how to approach a data management task for a client without depending on a third-party application – perhaps a client with highly specialized needs, or a one-off need that didn’t merit a new software investment.

Could I put together a kit of flexible, free tools to tackle a wide array of data problems in Salesforce? Perhaps using Ruby, my programming language of choice?

It turns out, it was not hard at all.

The Kit

Platform

The heart of my new data toolkit is the Kiba gem from Thibaut Barrere. This is a super easy to use, flexible ETL framework for Ruby. It allows you to define sources, destination, and transformations.

A “Source” is a Ruby class that implements a constructor and an “each” method, to connect to a data source and return rows one by one.

“Transformations” are either classes or blocks of Ruby code that transform data on its way from source to destination, or filter it out if needed.

A “Destination” is another Ruby class that implements a constructor and a “write” method for each row that it receives, to send the data to the destination data source.

You can think of this as a “pipeline” in that the data starts at the source, flows through each of the transformations in order, then the transformed data flows into the destination.

Kiba also includes the ability to define pre/post processing code around an ETL job, as well as logging as needed. Kiba jobs can be run from the command line, or programmatically. Since it is all Ruby, tests can be written and run to confirm all is working as desired.

Very cool! So with our platform in hand, we need to turn to some additional Ruby gems (i.e. libraries) to round out our kit.

Salesforce Gems

Two Ruby gems facilitate connecting our Kiba platform to Salesforce:

Restforce provides an easy way to authenticate to Salesforce and interact with the REST API from Ruby. Note that you will need to configure a Salesforce Connected App for Restforce to use to connect.

Salesforce_Bulk_Api allows you to interact with the Salesforce Bulk API from Ruby. It uses the Restforce gem for authentication. We’re doing data management, right? We might be working with a lot of rows – so we will need the Bulk API.

Data Transformation Gems

Sometimes it can be a joy to work with Salesforce data outside of Apex. So many useful libraries that can be easily brought to bear on whatever problem we are facing… Here are a couple of interesting Ruby gems that I incorporated into this solution – I am sure there are many more that I still have to discover:

Phonelib provides international phone number validation and formatting, using Google’s libphonenumber library, in Ruby. Not only can this library be used to standardize phone number formatting for your Salesforce records, but we can flag any phone numbers that are not valid for manual review.

Normalize_Country standardizes country names – Let’s all agree that “USA”, “U.S.”, “US” and “United States of America” all equal “United States”, OK?

Working Database

Working with data, you often need a temporary place to store data while you manipulate it or combine it.

For my Kit, I combined a PostgreSQL database running on Amazon AWS’s RDS service with the Ruby Pg gem (which provides connectivity to PostgreSQL databases from Ruby). It was super easy to setup, and a smaller developer-size instance costs a whopping $0.018 per hour to run. Obviously this can be scaled up as needed.

Putting It Together

OK – Now that I have my kit – how do I make it work?

Let’s look at a basic example – connecting to Salesforce, extracting Account information, doing a couple of simple transformations, and then writing that data to a PostgreSQL database.

First, the Source

Establishing a connection using the Restforce gem is easy:

And yes, I am aware that storing credentials in source is a bad idea. This is not production code.

Within our Kiba source class, we need to define our constructor, which submits our query to the Salesforce Bulk API, and our each method, which returns the results one row at a time.

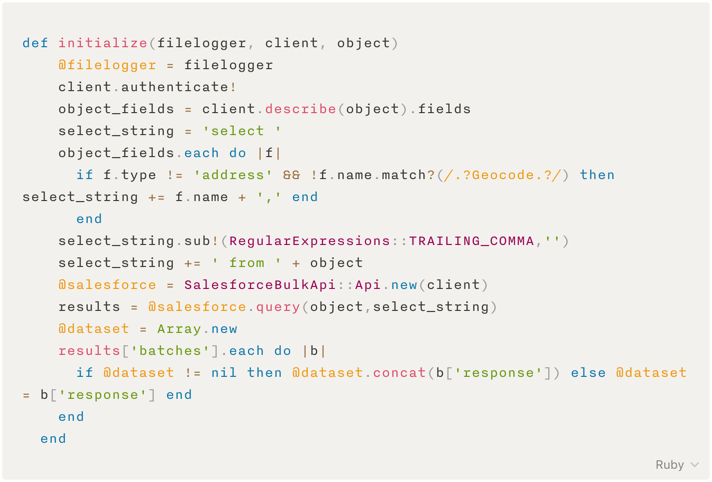

The constructor:

What is happening here?

We authenticate the client passed to the source class We retrieve the Salesforce describe information for the object passed in as a parameter (for our case, Account), then loop through the fields of the Account, building a SOQL query statement. We omit address and geocode fields, as they are not supported by the Bulk API. We submit the SOQL query We loop through the returned batches, appending the returned rows to an array type class instance variable @dataset. The each method:

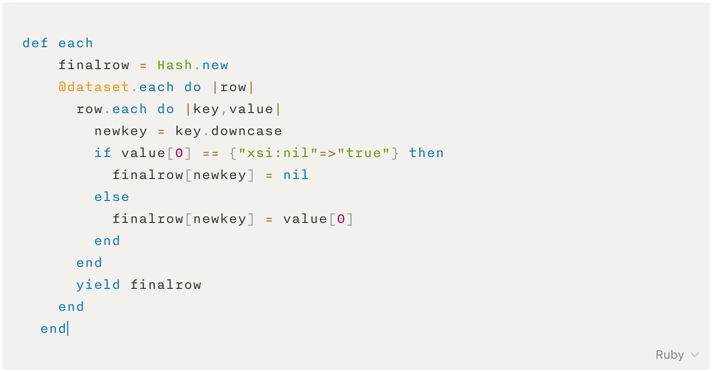

What is happening here?

We are taking one row at a time from the @dataset variable We are cleaning up the representation of null values, and yielding the row

Second, the Transformations

Here is a sample transformation:

What is happening here?

Each row is passed through this block of transform code on its way from source to destination. The normalize_country library is being called and passed the billing country. If the library recognizes the country, we replace the original value with the standard value provided by the library. If not, we leave the original value intact. Several of these transformations can be strung together to manipulate the data in different ways. Note at the end of the block the ‘row’ is returned. If we returned a ‘nil’ the row would drop out, and not be processed by any subsequent transformations, or be passed to the destination.

Lastly, the Destination

For a data cleansing project, you might imagine using Salesforce for both the source and the destination. Essentially pulling data out, scrubbing it in some way, then pushing it back in. Our scenario, writing to an external database, introduces the additional challenge of aligning the schemas. As we saw in the source section, we are essentially pulling all of the fields from the Salesforce object that the Bulk API can read. What if that includes new fields that are not in our destination database yet?

In my work on this toolkit, I developed an additional Ruby class that is invoked by the Kiba pre-processor to sync the database schema with Salesforce. If new fields exist in Salesforce, columns are added as needed to the corresponding PostgreSQL table. If they have been removed, the corresponding columns are removed from the table. I am not diving any deeper into this now, to keep this already overly long blog post from growing longer.

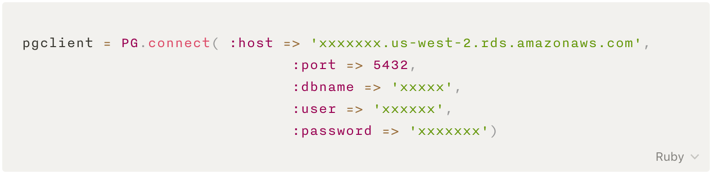

So, back to the destination – which, as you recall, needs a constructor and a “write” method. But first we need to establish a connection to the database – easy with the Pg gem:

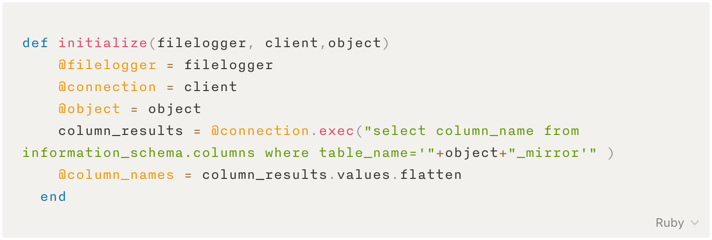

Our constructor:

What is happening here?

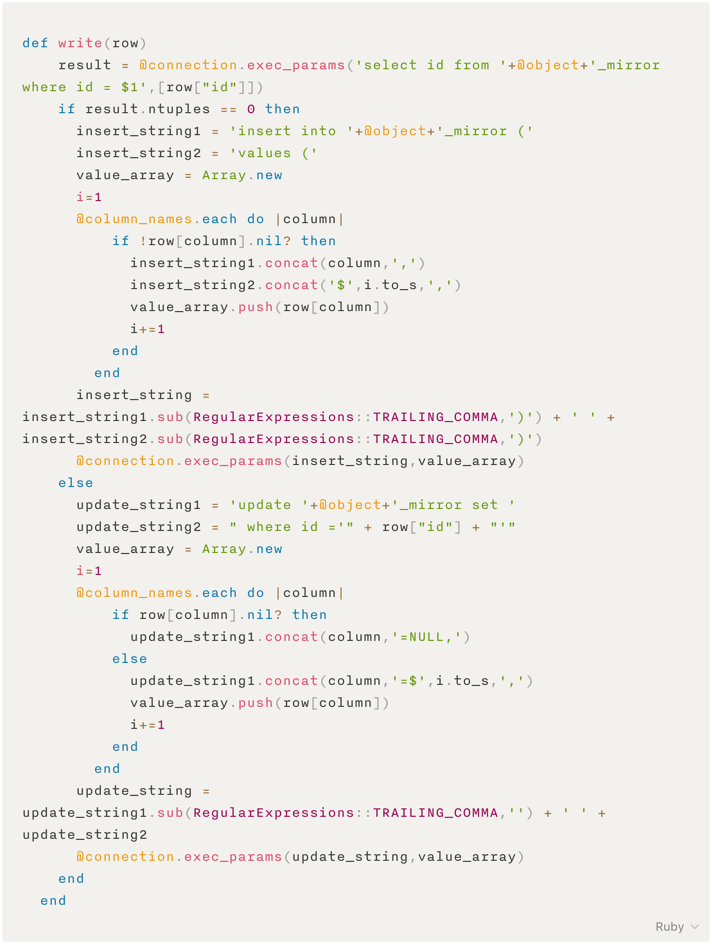

We are just pulling out the column names from the PostgreSQL database, using the Pg gem. We’ll need these later. Our write method:

What is happening here?

First we check to see if we already have a row in our table for this record from Salesforce. This will determine if we need to use an “insert” or “update” statement. PostgreSQL does support an upsert operation – I should streamline this code by using that. Build the appropriate SQL statement to be passed to PostgreSQL Use the Pg gem to pass the assembled statement to the database.

The Results

That’s it!

With a small amount of Ruby code, some great gems from the Ruby community, we have a platform to build a robust, flexible data solution for Salesforce. We can use it to move data around, or clean it “in place”. We can do whatever we need to do within the limits of Ruby and the Salesforce APIs.

Future Research/Enhancements

Duplicate Detection and Auto-Merging

I’ve started some research into how to use this toolkit for de-duping Salesforce data. I’ve identified some promising libraries and angles for “fuzzy matching” to try and identify duplicates in customer data. I also think I have a good scheme in mind to use the toolkit to identify duplicate records and then create a custom object in Salesforce for each duplicate (think of it as a “merge work order”), and then using a Salesforce batch apex job to work through the work orders to merge the duplicates.

I will try to dive into this is a subsequent blog post.

Heroku

Running this type of job on the client is fine for development and small one-off jobs, but what about larger jobs or recurring jobs?

I want to explore developing a solution using this toolkit, and then deploying it to Heroku to be run there, either once or on a regular schedule. Need to think about logging strategy, managing and protecting credentials with Heroku environment variables, etc.

Heroku also offers PostgreSQL as a service, so it would make sense to use that rather than AWS.

Maybe another subsequent blog post…

Scaling and Performance

Working in the confines of a developer edition instance, I have not been able to test how this toolkit fares when processing hundreds of thousands or even millions of records. So the jury is still out on the practicality of the tool until I can throw some real-world data volumes at it.